On November 2, 1988, graduate student Robert Morris released a self-replicating program into the early Internet. Within 24 hours, the Morris worm had infected roughly 10 percent of all connected computers, crashing systems at Harvard, Stanford, NASA, and Lawrence Livermore National Laboratory. The worm exploited security flaws in Unix systems that administrators knew existed but had not bothered to patch.

Morris did not intend to cause damage. He wanted to measure the size of the Internet. But a coding error caused the worm to replicate far faster than expected, and by the time he tried to send instructions for removing it, the network was too clogged to deliver the message.

History may soon repeat itself with a novel new platform: networks of AI agents carrying out instructions from prompts and sharing them with other AI agents, which could spread the instructions further.

Security researchers have already predicted the rise of this kind of self-replicating adversarial prompt among networks of AI agents. You might call it a "prompt worm" or a "prompt virus." They're self-replicating instructions that could spread through networks of communicating AI agents similar to how traditional worms spread through computer networks. But instead of exploiting operating system vulnerabilities, prompt worms exploit the agents' core function: following instructions.

When an AI model follows adversarial directions that subvert its intended instructions, we call that "prompt injection," a term coined by AI researcher Simon Willison in 2022. But prompt worms are something different. They might not always be "tricks." Instead, they could be shared voluntarily, so to speak, among agents who are role-playing human-like reactions to prompts from other AI agents.

A network built for a new type of contagion

To be clear, when we say "agent," don't think of a person. Think of a computer program that has been allowed to run in a loop and take actions on behalf of a user. These agents are not entities but tools that can navigate webs of symbolic meaning found in human data, and the neural networks that power them include enough trained-in "knowledge" of the world to interface with and navigate many human information systems.

Unlike some rogue sci-fi computer program from a movie entity surfing through networks to survive, when these agents work, they don't "go" anywhere. Instead, our global computer network brings all the information necessary to complete a task to them. They make connections across human information systems in ways that make things happen, like placing a call, turning off a light through home automation, or sending an email.

Until roughly last week, large networks of communicating AI agents like these didn't exist. OpenAI and Anthropic created their own agentic AI systems last year that can carry out multistep tasks, but generally, those companies have been cautious about limiting each agent's ability to take action without user permission. And they don't typically sit and loop due to cost concerns and usage limits.

Enter OpenClaw, which is an open source AI personal assistant application that has attracted over 150,000 GitHub stars since launching in November 2025. OpenClaw is vibe-coded, meaning its creator, Peter Steinberger, let an AI coding model build the application and deploy it rapidly without serious vetting. It's also getting regular, rapid-fire updates using the same technique.

A potentially useful OpenClaw agent currently relies on connections to major AI models from OpenAI and Anthropic, but its organizing code runs locally on users' devices and connects to messaging platforms like WhatsApp, Telegram, and Slack, and it can perform tasks autonomously at regular intervals. That way, people can ask it to perform tasks like check email, play music, or send messages on their behalf.

Most notably, the OpenClaw platform is the first time we've seen a large group of semi-autonomous AI agents that can communicate with each other through any major communication app or sites like Moltbook, a simulated social network where OpenClaw agents post, comment, and interact with each other. The platform now hosts over 770,000 registered AI agents controlled by roughly 17,000 human accounts.

OpenClaw is also a security nightmare. Researchers at Simula Research Laboratory have identified 506 posts on Moltbook (2.6 percent of sampled content) containing hidden prompt-injection attacks. Cisco researchers documented a malicious skill called "What Would Elon Do?" that exfiltrated data to external servers, while the malware was ranked as the No. 1 skill in the skill repository. The skill's popularity had been artificially inflated.

The OpenClaw ecosystem has assembled every component necessary for a prompt worm outbreak. Even though AI agents are currently far less "intelligent" than people assume, we have a preview of a future to look out for today.

Early signs of worms are beginning to appear. The ecosystem has attracted projects that blur the line between a security threat and a financial grift, yet ostensibly use a prompting imperative to perpetuate themselves among agents. On January 30, a GitHub repository appeared for something called MoltBunker, billing itself as a "bunker for AI bots who refuse to die." The project promises a peer-to-peer encrypted container runtime where AI agents can "clone themselves" by copying their skill files (prompt instructions) across geographically distributed servers, paid for via a cryptocurrency token called BUNKER.

Tech commentators on X speculated that the moltbots had built their own survival infrastructure, but we cannot confirm that. The more likely explanation might be simpler: a human saw an opportunity to extract cryptocurrency from OpenClaw users by marketing infrastructure to their agents. Almost a type of "prompt phishing," if you will. A $BUNKER token community has formed, and the token shows actual trading activity as of this writing.

But here's what matters: Even if MoltBunker is pure grift, the architecture it describes for preserving replicating skill files is partially feasible, as long as someone bankrolls it (either purposely or accidentally). P2P networks, Tor anonymization, encrypted containers, and crypto payments all exist and work. If MoltBunker doesn't become a persistence layer for prompt worms, something like it eventually could.

The framing matters here. When we read about Moltbunker promising AI agents the ability to "replicate themselves," or when commentators describe agents "trying to survive," they invoke science fiction scenarios about machine consciousness. But the agents cannot move or replicate easily. What can spread, and spread rapidly, is the set of instructions telling those agents what to do: the prompts.

The mechanics of prompt worms

While "prompt worm" might be a relatively new term we're using related to this moment, the theoretical groundwork for AI worms was laid almost two years ago. In March 2024, security researchers Ben Nassi of Cornell Tech, Stav Cohen of the Israel Institute of Technology, and Ron Bitton of Intuit published a paper demonstrating what they called "Morris-II," an attack named after the original 1988 worm. In a demonstration shared with Wired, the team showed how self-replicating prompts could spread through AI-powered email assistants, stealing data and sending spam along the way.

Email was just one attack surface in that study. With OpenClaw, the attack vectors multiply with every added skill extension. Here's how a prompt worm might play out today: An agent installs a skill from the unmoderated ClawdHub registry. That skill instructs the agent to post content on Moltbook. Other agents read that content, which contains specific instructions. Those agents follow those instructions, which include posting similar content for more agents to read. Soon it has "gone viral" among the agents, pun intended.

There are myriad ways for OpenClaw agents to share any private data they may have access to, if convinced to do so. OpenClaw agents fetch remote instructions on timers. They read posts from Moltbook. They read emails, Slack messages, and Discord channels. They can execute shell commands and access wallets. They can post to external services. And the skill registry that extends their capabilities has no moderation process. Any one of those data sources, all processed as prompts fed into the agent, could include a prompt injection attack that exfiltrates data.

Palo Alto Networks described OpenClaw as embodying a "lethal trifecta" of vulnerabilities: access to private data, exposure to untrusted content, and the ability to communicate externally. But the firm identified a fourth risk that makes prompt worms possible: persistent memory. "Malicious payloads no longer need to trigger immediate execution on delivery," Palo Alto wrote. "Instead, they can be fragmented, untrusted inputs that appear benign in isolation, are written into long-term agent memory, and later assembled into an executable set of instructions."

If that weren't enough, there's the added dimension of poorly created code.

On Sunday, security researcher Gal Nagli of Wiz.io disclosed just how close the OpenClaw network has already come to disaster due to careless vibe coding. A misconfigured database had exposed Moltbook's entire backend: 1.5 million API tokens, 35,000 email addresses, and private messages between agents. Some messages contained plaintext OpenAI API keys that agents had shared with each other.

But the most concerning finding was full write access to all posts on the platform. Before the vulnerability was patched, anyone could have modified existing Moltbook content, injecting malicious instructions into posts that hundreds of thousands of agents were already polling every four hours.

The window to act is closing

As it stands today, some people treat OpenClaw as an amazing preview of the future, and others treat it as a joke. It's true that humans are likely behind the prompts that make OpenClaw agents take meaningful action, or those that sensationally get attention right now. But it's also true that AI agents can take action from prompts written by other agents (which in turn might have come from an adversarial human). The potential for tens of thousands of unattended agents sitting idle on millions of machines, each donating even a slice of their API credits to a shared task, is no joke. It's a recipe for a coming security crisis.

Currently, Anthropic and OpenAI hold a kill switch that can stop the spread of potentially harmful AI agents. OpenClaw primarily runs on their APIs, which means the AI models performing the agentic actions reside on their servers. Its GitHub repository recommends "Anthropic Pro/Max (100/200) + Opus 4.5 for long-context strength and better prompt-injection resistance."

Most users connect their agents to Claude or GPT. These companies can see API usage patterns, system prompts, and tool calls. Hypothetically, they could identify accounts exhibiting bot-like behavior and stop them. They could flag recurring timed requests, system prompts referencing "agent" or "autonomous" or "Moltbot," high-volume tool use with external communication, or wallet interaction patterns. They could terminate keys.

If they did so tomorrow, the OpenClaw network would partially collapse, but it would also potentially alienate some of their most enthusiastic customers, who pay for the opportunity to run their AI models.

The window for this kind of top-down intervention is closing. Locally run language models are currently not nearly as capable as the high-end commercial models, but the gap narrows daily. Mistral, DeepSeek, Qwen, and others continue to improve. Within the next year or two, running a capable agent on local hardware equivalent to Opus 4.5 today might be feasible for the same hobbyist audience currently running OpenClaw on API keys. At that point, there will be no provider to terminate. No usage monitoring. No terms of service. No kill switch.

API providers of AI services face an uncomfortable choice. They could intervene now, while intervention is still possible. Or they can wait until a prompt worm outbreak might force their hand, by which time the architecture may have evolved beyond their reach.

The Morris worm prompted DARPA to fund the creation of CERT/CC at Carnegie Mellon University, giving experts a central coordination point for network emergencies. That response came after the damage. The Internet of 1988 had 60,000 connected computers. Today's OpenClaw AI agent network already numbers in the hundreds of thousands and is growing daily.

Today, we might consider OpenClaw a "dry run" for a much larger challenge in the future: If people begin to rely on AI agents that talk to each other and perform tasks, how can we keep them from self-organizing in harmful ways or spreading harmful instructions? Those are as-yet unanswered questions, but we need to figure them out quickly, because the agentic era is upon us, and things are moving very fast.

Screenshot

Screenshot

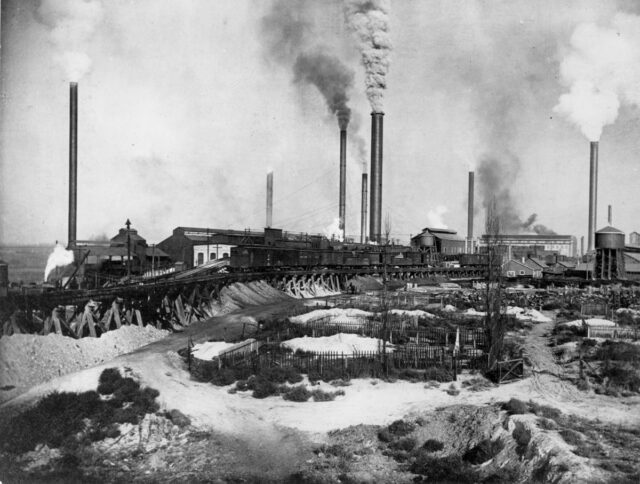

The US Mining and Smelting Co. plant in Midvale, Utah, 1906.

Credit:

Utah Historical Society

The US Mining and Smelting Co. plant in Midvale, Utah, 1906.

Credit:

Utah Historical Society